ICP Back-Propagation Fix

An error in the ICP iterations

    import cv2
    import numpy as np
    import matplotlib.pyplot as plt
    from sklearn.neighbors import NearestNeighbors

    def icp(a, b, init_pose=(0, 0, 0), no_iterations=13):
        # a, b: 2xN point arrays; init_pose: (x, y, theta)
        src = np.array([a.T], copy=True).astype(np.float32)
        dst = np.array([b.T], copy=True).astype(np.float32)

        # Initial pose as a 3x3 homogeneous transform
        Tr = np.array([[np.cos(init_pose[2]), -np.sin(init_pose[2]), init_pose[0]],
                       [np.sin(init_pose[2]),  np.cos(init_pose[2]), init_pose[1]],
                       [0,                     0,                    1           ]])

        src = cv2.transform(src, Tr[0:2])

        for i in range(no_iterations):
            # Match every source point to its nearest target point
            nbrs = NearestNeighbors(n_neighbors=1, algorithm='auto',
                                    warn_on_equidistant=False).fit(dst[0])
            distances, indices = nbrs.kneighbors(src[0])

            # Incremental transform for this iteration
            T = cv2.estimateRigidTransform(src, dst[0, indices.T], False)
            src = cv2.transform(src, T)

            # Here is the main wrong code: T is applied after Tr, so it must
            # multiply from the left, not from the right
            Tr = np.dot(Tr, np.vstack((T, [0, 0, 1])))

        return Tr[0:2]
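
The listing relies on `cv2.estimateRigidTransform`, which is not available in OpenCV 4 and later. To experiment with the per-iteration fit without it, a NumPy-only stand-in can be sketched with the SVD (Kabsch) method. This is a swapped-in technique and not the code used for the figures: the name `fit_rigid_2d` is hypothetical, and unlike `estimateRigidTransform(..., False)`, which also estimates a uniform scale, this sketch fits rotation and translation only.

```python
import numpy as np

def fit_rigid_2d(src, dst):
    """Least-squares rotation + translation mapping src onto dst.

    src, dst: (N, 2) arrays of already-matched points.
    Returns a 2x3 matrix [R | t], the same shape as T in the listing.
    """
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    # Cross-covariance of the centered point sets
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Force a proper rotation (det = +1), never a reflection
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return np.hstack([R, t[:, None]])

# Synthetic check with a known pose (values are illustrative only)
rng = np.random.default_rng(1)
src = rng.normal(size=(20, 2))
theta = 0.3
R_true = np.array([[np.cos(theta), -np.sin(theta)],
                   [np.sin(theta),  np.cos(theta)]])
dst = src @ R_true.T + np.array([0.5, -0.2])

T = fit_rigid_2d(src, dst)
print(T)
```

With noise-free, correctly matched points the recovered `T` reproduces the pose used to build `dst`; inside ICP the matches come from the nearest-neighbor step, so the fit is only as good as those matches.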

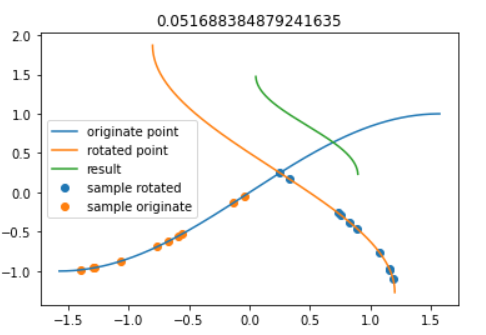

- Incorrect behavior

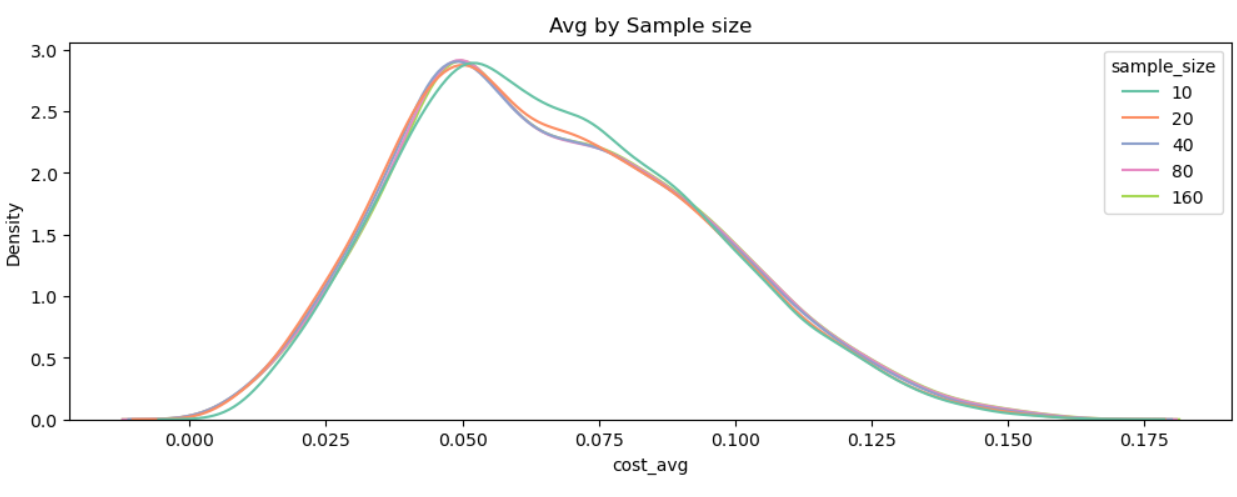

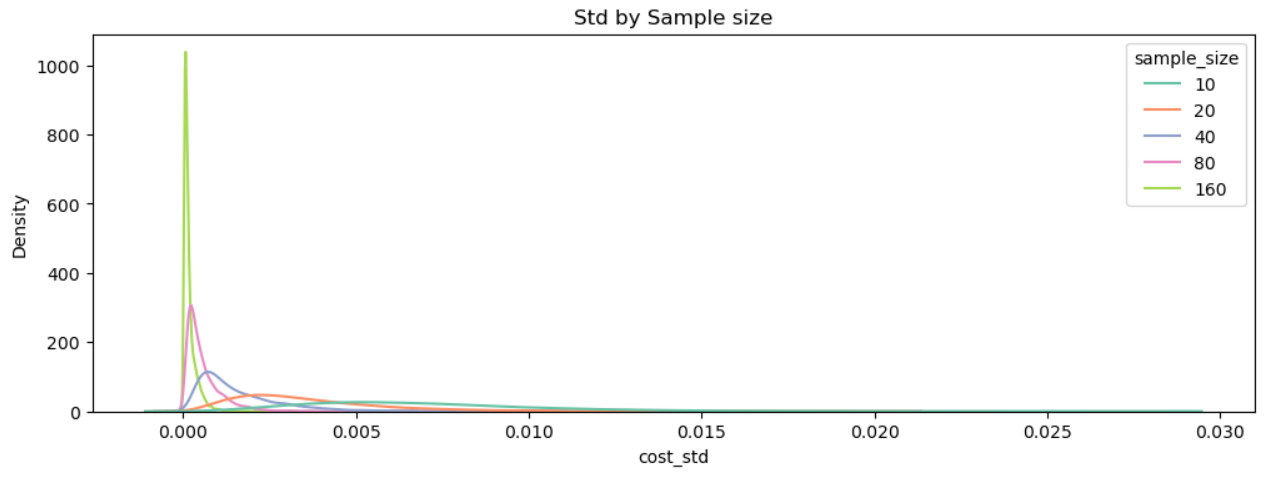

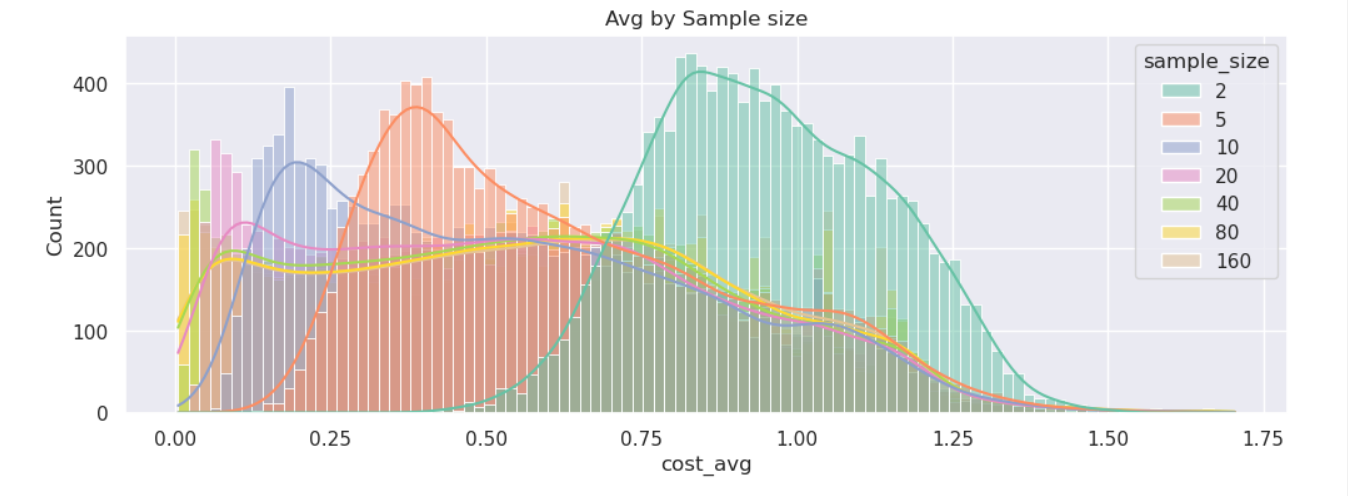

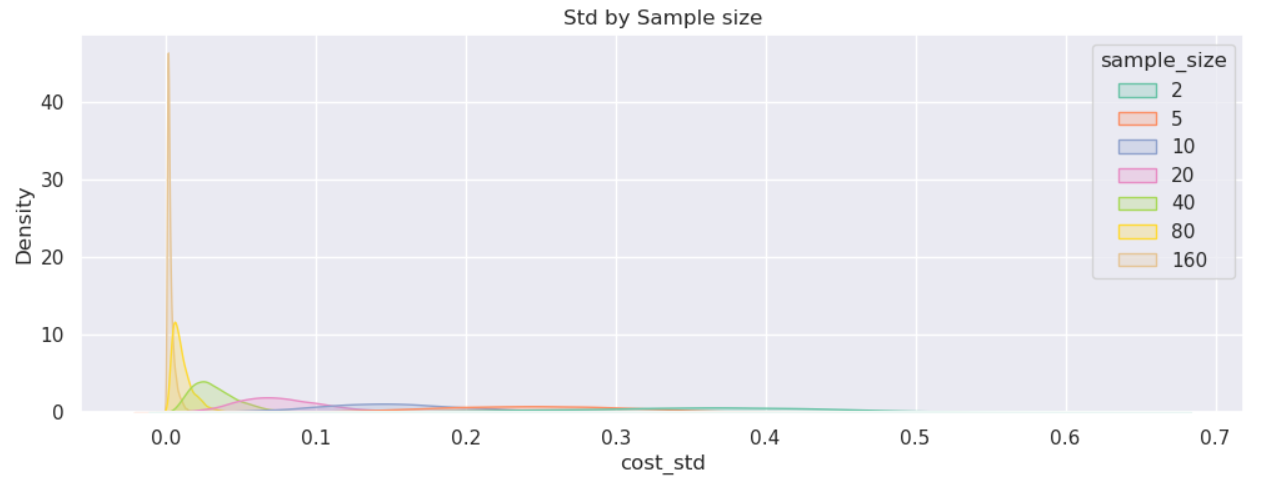

- Biased estimate

- Effect of sample size

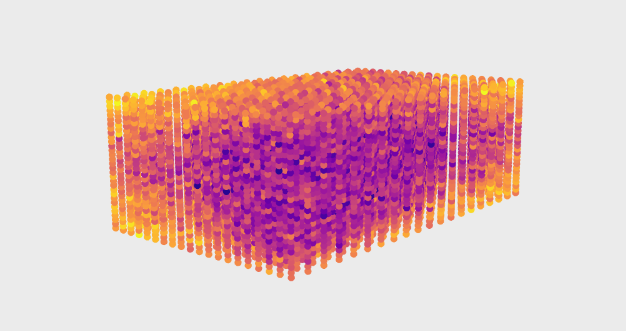

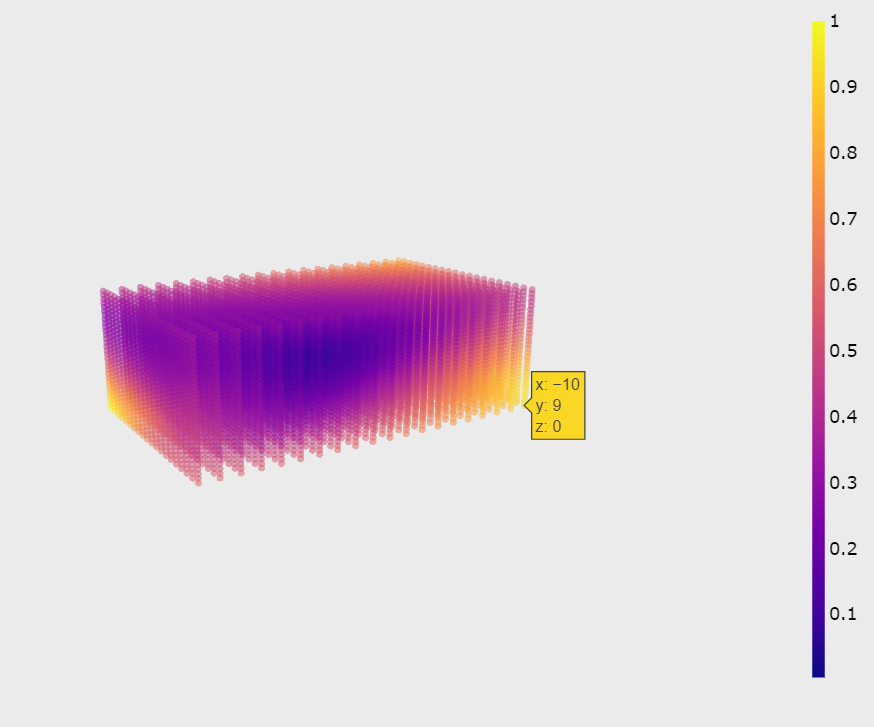

- Cost field

- Biased estimate

How to fix it

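    # Wrong: right-multiplies the new step
    # Tr = np.dot(Tr, np.vstack((T,[0,0,1])))

    # Fixed: left-multiply, because T is applied after the accumulated Tr
    Tr = np.matmul(np.vstack((T, [0, 0, 1])), Tr)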
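
Why the order matters can be checked numerically. The sketch below uses NumPy only; the helpers `pose_matrix` and `apply` and the pose values are hypothetical stand-ins for the per-iteration `T`. It moves a few points step by step, as the ICP loop does, and compares the result with both ways of accumulating `Tr`.

```python
import numpy as np

def pose_matrix(x, y, theta):
    """3x3 homogeneous matrix: rotate by theta, then translate by (x, y)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, x], [s, c, y], [0.0, 0.0, 1.0]])

def apply(M, pts):
    """Apply a 3x3 homogeneous transform to an (N, 2) array of points."""
    return pts @ M[:2, :2].T + M[:2, 2]

rng = np.random.default_rng(0)
pts = rng.normal(size=(5, 2))

Tr0 = pose_matrix(0.1, 0.3, 0.4)                 # initial pose
steps = [pose_matrix(0.5, -0.2, 0.3),            # illustrative per-iteration
         pose_matrix(-0.1, 0.4, -0.7)]           # transforms

moved = apply(Tr0, pts)
Tr_left, Tr_right = Tr0.copy(), Tr0.copy()
for T in steps:
    moved = apply(T, moved)      # what the loop does to src
    Tr_left = T @ Tr_left        # fixed accumulation
    Tr_right = Tr_right @ T      # original (wrong) accumulation

left_ok = np.allclose(apply(Tr_left, pts), moved)
right_ok = np.allclose(apply(Tr_right, pts), moved)
print(left_ok, right_ok)
```

Only the left-multiplied product maps the original points onto the points the loop actually produced. In 2D the rotation parts agree either way because planar rotations commute, so the error shows up in the translation.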

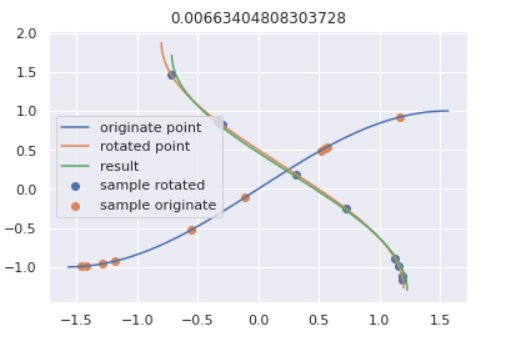

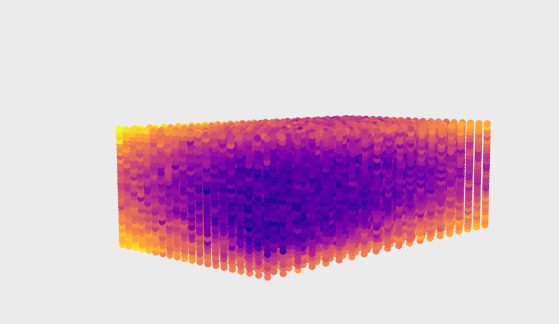

- After the fix

sample_size: [2, 5, 10, 20, 40, 80, 160]

iter_num: 20

- Reduced estimation bias

- Effect of sample size

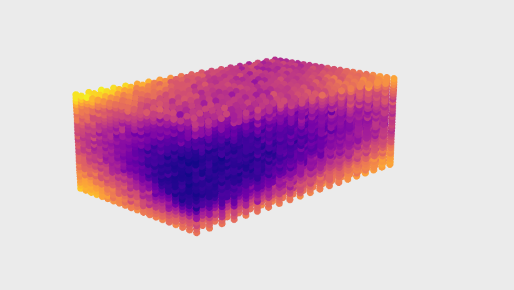

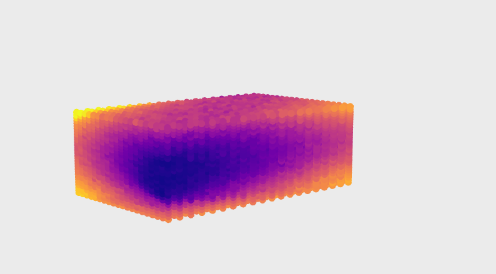

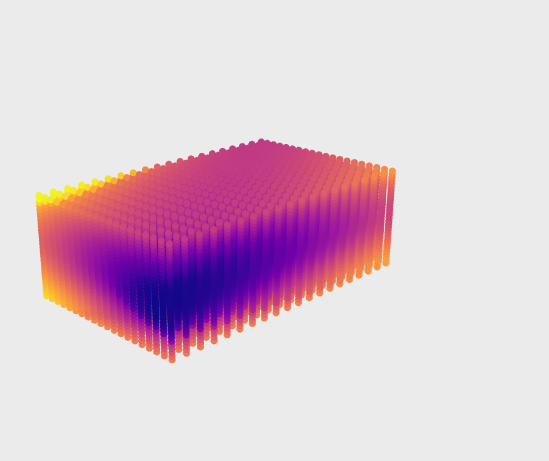

- Cost field

Panels: sample size = 2, 5, 10, 20, 160

Fitting

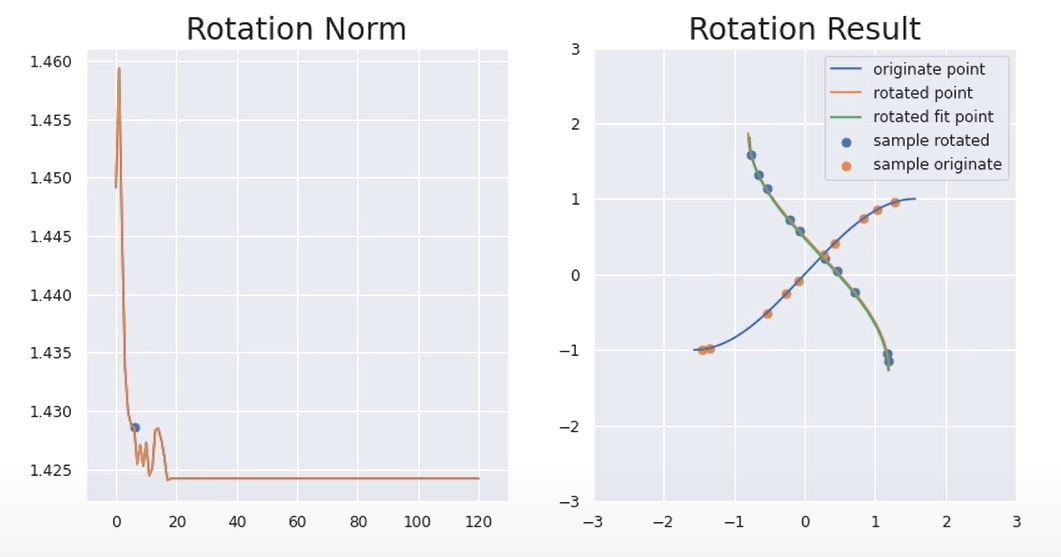

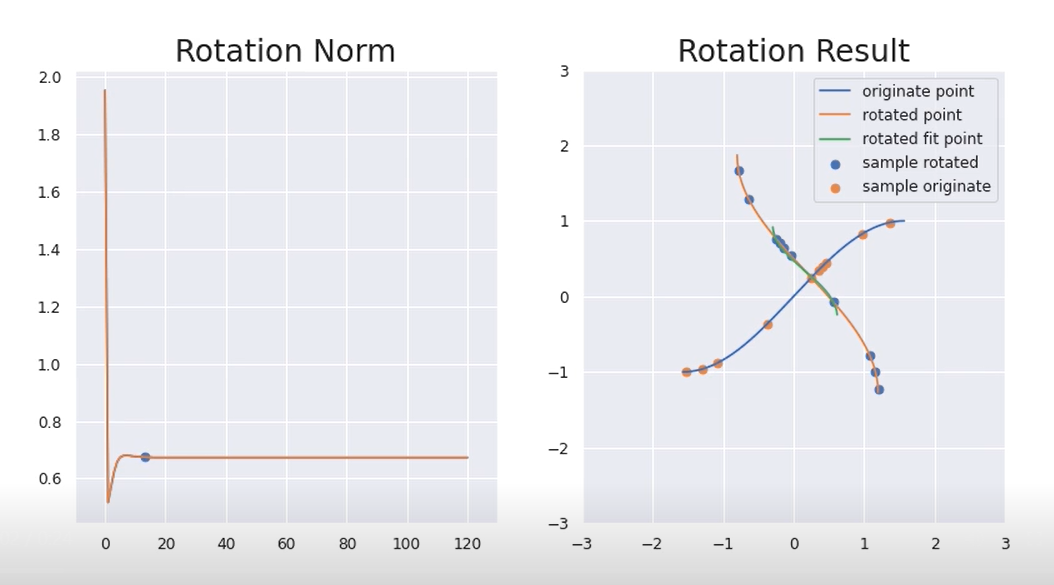

- Case 1

sample_size=10, Nelder-Mead, iter_num=120, p=[0.1, 0.3, np.pi/2.2]

- Case 2

sample_size=10, Nelder-Mead, iter_num=120, p=[-1, -0.9, 0]

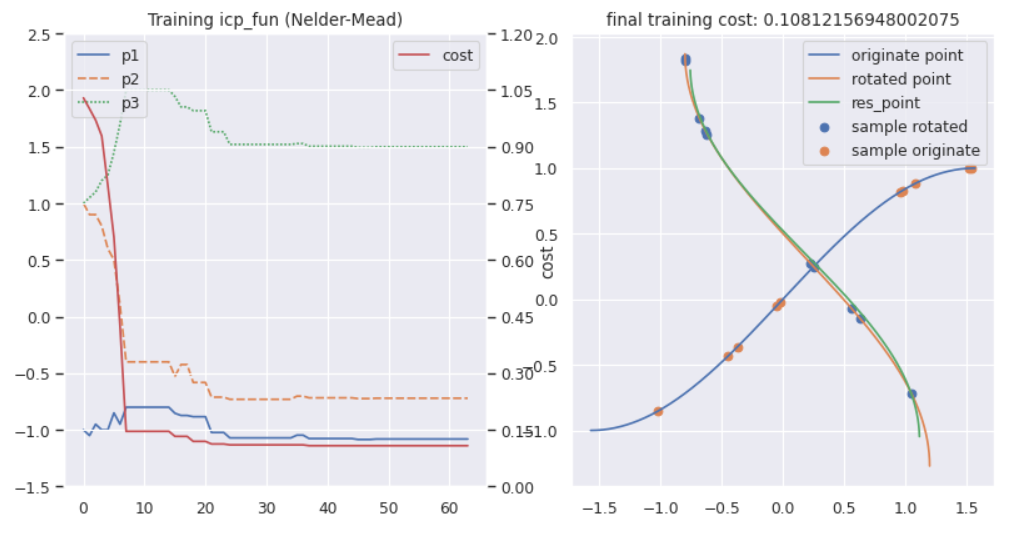

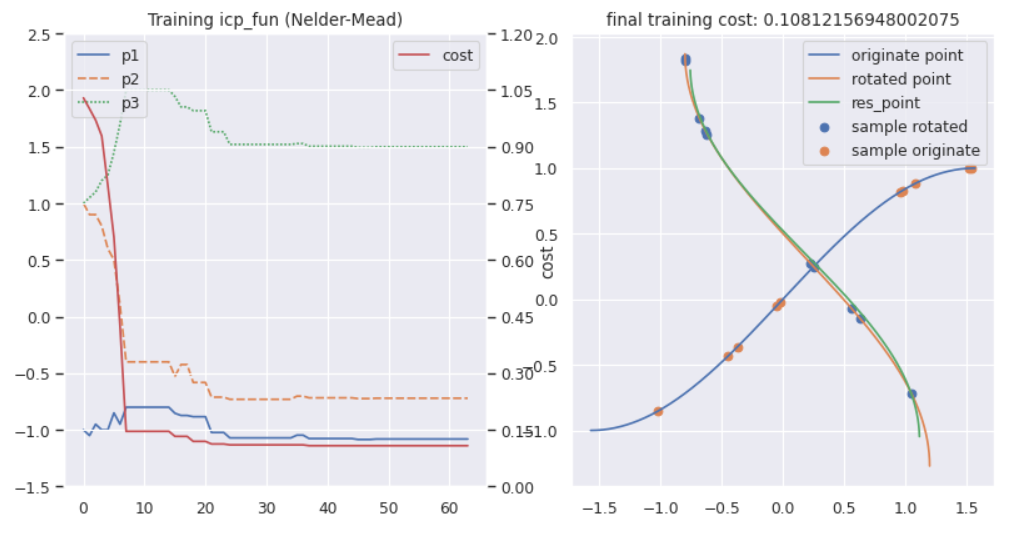

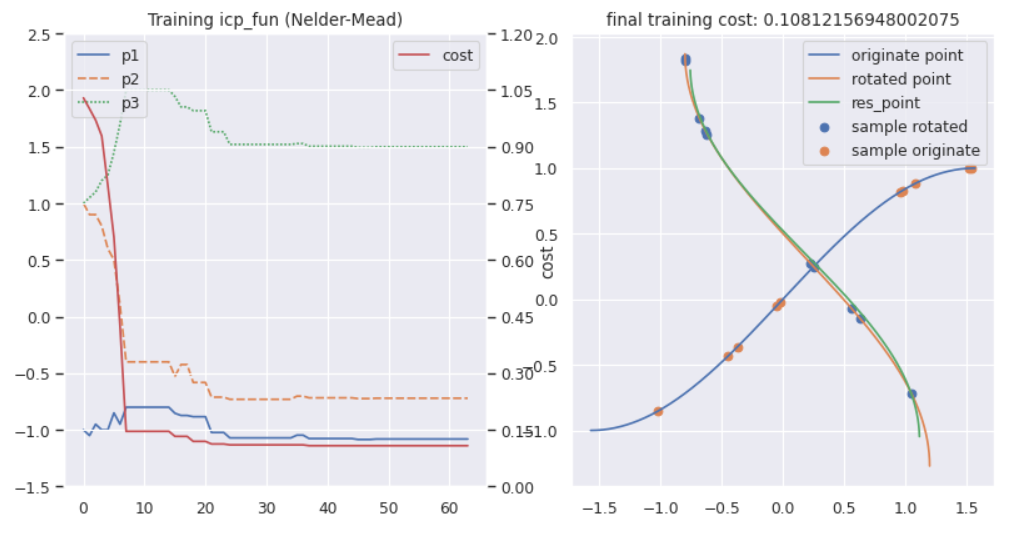
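
The fitting cases above can be approximated with a small sketch, under several assumptions that the slides do not confirm: that `p` is a pose `(x, y, theta)`, that the cost is the mean squared nearest-neighbor distance, and that "Nelder-Mead" refers to `scipy.optimize.minimize(..., method="Nelder-Mead")`. The helpers `transform` and `cost`, the data, and `true_p` are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize

def transform(p, pts):
    """Apply pose p = (x, y, theta) to an (N, 2) array of points."""
    x, y, theta = p
    c, s = np.cos(theta), np.sin(theta)
    R = np.array([[c, -s], [s, c]])
    return pts @ R.T + np.array([x, y])

def cost(p, src, dst):
    """Mean squared distance from each moved source point to its nearest target."""
    moved = transform(p, src)
    d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
    return d2.min(axis=1).mean()

rng = np.random.default_rng(0)
src = rng.uniform(-1, 1, size=(10, 2))    # sample_size = 10
true_p = np.array([0.2, -0.1, 0.15])      # hypothetical ground-truth pose
dst = transform(true_p, src)

# Derivative-free search over the pose, capped at 120 iterations
res = minimize(cost, x0=[0.0, 0.0, 0.0], args=(src, dst),
               method="Nelder-Mead", options={"maxiter": 120})
print(res.x, res.fun)
```

Because nearest-neighbor matching makes the cost non-smooth and multi-modal, the result depends on the starting point, which is presumably why the cases compare different initial values.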

Training

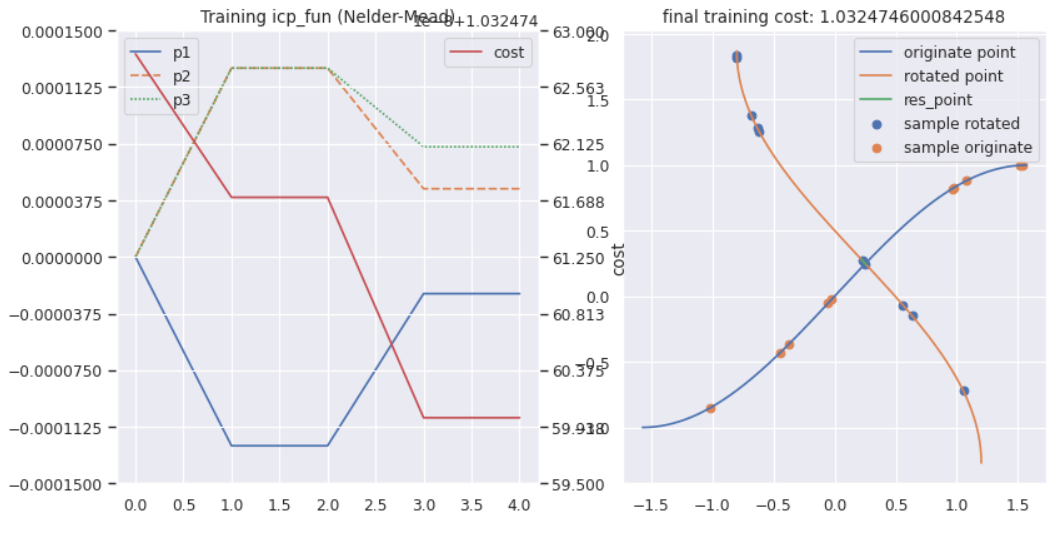

- Case 1

sample_size=10, Nelder-Mead, iter_num=20, init_x=[0, 0, 0]

- Case 2

sample_size=10, Nelder-Mead, iter_num=20, init_x=[1, 1, 1]

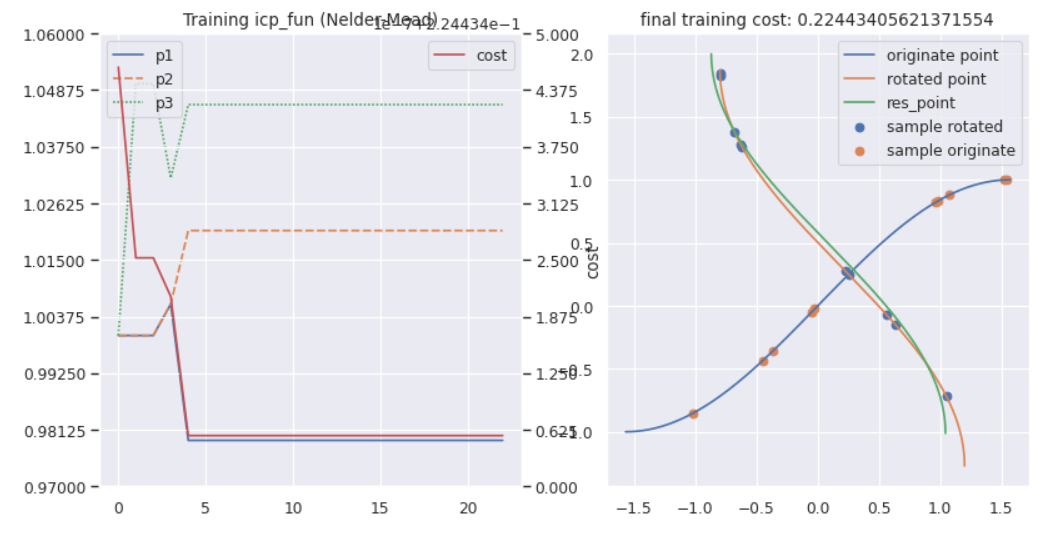

- Case 3

sample_size=10, Nelder-Mead, iter_num=20, init_x=[2, 2, 2]

- Case 4

sample_size=10, Nelder-Mead, iter_num=1, init_x=[1, -1, 2]

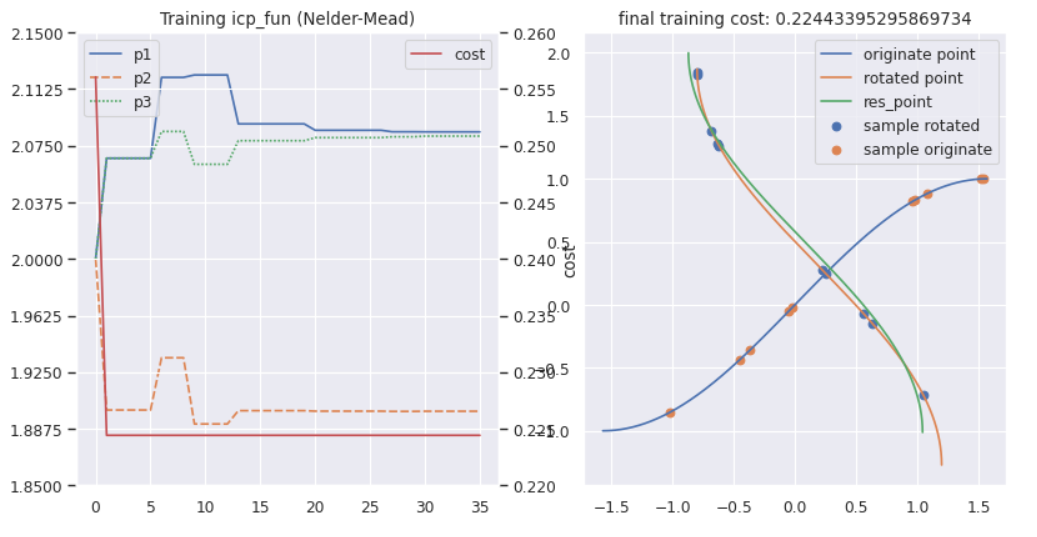

- Case 5

sample_size=10, Nelder-Mead, iter_num=2, init_x=[-1, 1, 1]

- Case 6

sample_size=10, Nelder-Mead, iter_num=1, init_x=[1, -1, 2]

- Case 7

sample_size=10, Nelder-Mead, iter_num=0 (no affine), init_x=[10, -10, 2]